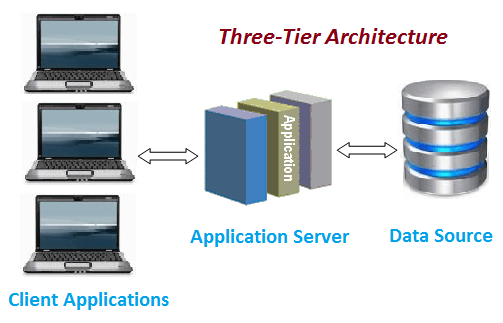

I’ve spent 35+ years building and deploying internet-facing web sites. In a three-tier architecture, the front-end load balancers and web servers are tier 1, sometimes referred to as the client application or client tier. Next up is the application server (tier 2), and finally the back-end database server or data source (tier 3). To tie this together in a more understandable way, I’ll compare it to a standard WordPress installation, which I believe many will have some familiarity with.

Tier 1 is your web servers, and in most cases that will be Apache 2 or nginx. Tier 2 is the application servers, and in this example that’s PHP. Tier 3 is the database, and to continue the example that would be MySQL or MariaDB in most cases.
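To make the mapping concrete, here is a minimal sketch of the WordPress example as a small data structure. The `Tier` class, `WORDPRESS_STACK` and `describe` are names I made up for illustration; they are not part of WordPress or any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """One tier of the stack (hypothetical helper type for this example)."""
    number: int
    role: str
    examples: tuple


# The three tiers of a typical WordPress installation, as described above.
WORDPRESS_STACK = (
    Tier(1, "web server", ("Apache 2", "nginx")),
    Tier(2, "application server", ("PHP",)),
    Tier(3, "database", ("MySQL", "MariaDB")),
)


def describe(stack):
    """Return one human-readable line per tier."""
    return [
        f"Tier {t.number}: {t.role} ({' or '.join(t.examples)})"
        for t in stack
    ]


for line in describe(WORDPRESS_STACK):
    print(line)
```

Nothing here talks to a real server; it only gives the three tiers names we can reason about.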

In the Linux world of small to medium-sized businesses, a single server is often used to contain all three tiers. Though this immensely reduces complexity and decreases a system administrator’s burden, it works against site growth. As a site grows and the number of users increases, a time will come when front-end latency starts to become an issue. Visitors interacting with the web site may find performance degraded compared to times when the traffic was moderate. When all three tiers live on the same server, they contend with each other for CPU, disk and memory IO (input/output) in direct relation to the traffic load on the web site.

Much of the time this problem is solved with vertical scaling: get a bigger and faster server with more memory, faster disk drives and wider IO buses. But this will only go so far, and eventually you’ll hit the limit of the fastest machines available on the market.

That’s when horizontal scaling becomes the preferred solution. This is where you split apart your tiers, sometimes into 2, much of the time into 3 separate logical entities. In some infrastructures it makes sense to have the web and application tiers on one server and the database on a separate server. To continue with the example, however, let’s imagine we are using 3 different servers, one for each tier. When you horizontally scale an infrastructure in this manner, you isolate contention for CPU, memory and IO. This introduces some complexity, but to offset that it gives you the ability to accurately determine where the bottleneck in your infrastructure lies. If the web server is the source of contention, you add web servers. If the application server is a bottleneck, you add more application servers. The same goes for the database: when the database server starts thrashing, it’s time to add another database server. We’ll sidestep the issue of writing good code (developers get a pass) for the sake of this example.
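The "find the bottleneck, then add servers to that tier" rule can be sketched in a few lines of Python. This is only an illustration under assumptions of mine: the utilization numbers are invented, the 0.85 threshold is arbitrary, and `find_bottleneck` and `recommend` are hypothetical helpers, not part of any monitoring tool.

```python
# What to do when a given tier is the bottleneck, per the paragraph above.
SCALING_ACTION = {
    "web": "add a web server",
    "application": "add an application server",
    "database": "add a database server",
}


def find_bottleneck(utilization, threshold=0.85):
    """Return the busiest tier if it is at or above the threshold, else None.

    `utilization` maps a tier name to a load figure between 0.0 and 1.0.
    The threshold is an assumed value; tune it for your own environment.
    """
    tier, load = max(utilization.items(), key=lambda item: item[1])
    return tier if load >= threshold else None


def recommend(utilization, threshold=0.85):
    """Translate the bottleneck, if any, into a scaling action."""
    tier = find_bottleneck(utilization, threshold)
    if tier is None:
        return "no tier is saturated"
    return SCALING_ACTION[tier]


# Made-up sample readings: the database tier is the one thrashing.
sample = {"web": 0.40, "application": 0.55, "database": 0.93}
print(recommend(sample))
```

The point is that once each tier lives on its own server, a per-tier load figure like this becomes meaningful. On a single shared server the three numbers would blur together.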

There’s one more place a bottleneck can appear that I haven’t discussed, and that’s the network. This is usually the easiest to fix but is usually the last thing to contend with, so for the purposes of the example we’ll sidestep this issue as well. It is important enough to mention, though.

The final thing I want to touch on is the issue of support. How does that fit in here? Well, imagine something is wrong on your web site, so you call your hosting provider and tell them, “My web site is broken.” Without going any further than that, this is the same reason hosting providers have different ‘tiers’ of support. If you KNEW the problem was with the web server, application server, database server or even the network, you would have given them a considerable amount of information towards solving it. “My web site is broken” gives support personnel no information, so through their years of experience they’ve learned to break a problem down into more manageable chunks in an effort to isolate it. Hence the different ‘tiers’ of support.
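Breaking "my web site is broken" into chunks might look something like the sketch below. It assumes you have some way to check each layer; the lambdas here are stand-ins with hard-coded results, and `TRIAGE_ORDER` and `isolate` are hypothetical names. The order simply follows the list in the paragraph above and is not a rule.

```python
# Layers to check, in the order they were listed above (an assumption).
TRIAGE_ORDER = ("web server", "application server", "database server", "network")


def isolate(checks):
    """Return the first layer whose health check fails, or None if all pass.

    `checks` maps each layer name to a zero-argument callable that
    returns True when that layer looks healthy.
    """
    for layer in TRIAGE_ORDER:
        if not checks[layer]():
            return layer
    return None


# Stand-in checks with hard-coded results; real ones would probe the servers.
checks = {
    "web server": lambda: True,
    "application server": lambda: True,
    "database server": lambda: False,
    "network": lambda: True,
}
print(isolate(checks))
```

With these stand-in results the answer is the database server, which is already far more useful to a support engineer than "it's broken."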

Thank you for your time.